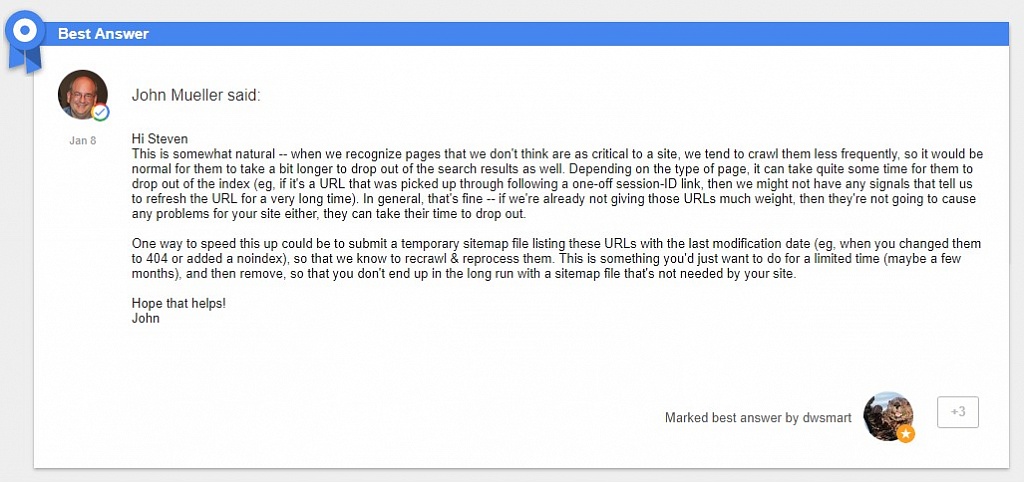

Google representative John Mueller has explained how to get multiple URLs removed from Google’s index faster.

Recently, a user on the Google Webmaster Central Help forum asked how to remove a batch of “trash” pages from Google’s index.

Mueller recommended submitting a temporary sitemap file that lists all of these URLs along with their last-modification dates. However, he did not say how soon the pages would drop out of the index.
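Below is a minimal sketch of what such a temporary sitemap might look like, written in Python with the standard library. The sitemap protocol records the last-modification date in each URL's `<lastmod>` element. The sketch assumes something the article does not state: the unwanted pages already return 404/410 or carry a noindex tag. In that case the sitemap removes nothing by itself; it only signals that the pages changed and are worth recrawling. The helper name `build_removal_sitemap` and the `example.com` URLs are hypothetical.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_removal_sitemap(urls, changed_on: date) -> str:
    """Return a sitemap (XML string) listing each URL with a <lastmod> date.

    Hypothetical helper. It assumes the listed pages already return
    404/410 or carry a noindex tag; the sitemap only invites a recrawl.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" in the URL automatically
        ET.SubElement(entry, "loc").text = url
        # W3C Datetime format; a plain YYYY-MM-DD date is accepted
        ET.SubElement(entry, "lastmod").text = changed_on.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```

For example, `build_removal_sitemap(["https://example.com/old-page-1"], date.today())` returns the XML text. You would save it to a file on the site and submit that file's URL in Google Search Console. Once the pages have been recrawled, the temporary sitemap can be removed again.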

SEO expert commentary:

«A sitemap.xml file helps search robots find new or updated content faster. One key requirement is that the file must use the XML format. You can create it with the built-in tools of popular CMSs or with third-party software such as Screaming Frog or Xenu. You can also write it by hand, but then you will probably need help from a professional developer.

Once the file is created, upload it to the root directory of your website. By default, it should be available at site.com/sitemap.xml. To get Google to crawl the file sooner, copy the URL of your sitemap (sitemap.xml) and submit it in Google Search Console.

Keep in mind that an HTML sitemap and an XML sitemap are different things: adding an HTML sitemap will not speed up indexing. Also note that a single XML sitemap cannot include more than 50,000 URLs. This matters especially for large e-commerce stores with hundreds of thousands of URLs».

Technical SEO expert at SEO.RU

Konstantin Abdullin
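The sketch below shows one way a large store could stay within the 50,000-URL limit mentioned above. It splits the URL list into several sitemap files and lists them in a sitemap index file, which the sitemap protocol supports. It is a minimal Python sketch under stated assumptions. The helper `split_into_sitemaps`, the file names `sitemap-N.xml` and `sitemap_index.xml`, and the base URL are all hypothetical, and the files are returned as strings rather than written to disk.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # per-file URL limit from the sitemap protocol


def _serialize(root) -> str:
    """Serialize an element tree with a UTF-8 XML declaration."""
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(root, encoding="unicode")


def split_into_sitemaps(urls, base_url, limit=MAX_URLS_PER_SITEMAP) -> dict:
    """Split a list of URLs into sitemaps of at most `limit` entries plus an index.

    Returns a dict mapping (hypothetical) file names to XML strings.
    """
    files = {}
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for start in range(0, len(urls), limit):
        name = f"sitemap-{start // limit + 1}.xml"
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for url in urls[start:start + limit]:
            ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
        files[name] = _serialize(urlset)
        # Register each child sitemap in the index by its absolute URL
        ET.SubElement(ET.SubElement(index, "sitemap"), "loc").text = f"{base_url}/{name}"
    files["sitemap_index.xml"] = _serialize(index)
    return files
```

With this layout, only the index file's URL needs to be submitted in Google Search Console. The protocol also caps each uncompressed sitemap at 50 MB, so sites with very long URLs may need a lower `limit`.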